Thales Crawley have turned Year 6 Pupil Scarlett’s Sign Language Translator into a reality

Introduction to the partnership

Thales are one of our National Partners for our annual engineering competition, which asks pupils aged 3-19 ‘If you were an engineer what would you do?’ and encourages pupils to come up with creative solutions to real-world problems.

Five Thales teams from various sites around the UK are each working on one of the 5 pupil engineering ideas selected for development into a prototype over the 2023/2024 academic year. The prototypes will be unveiled at the Awards Ceremony and Public Exhibition during the Summer term.

The prototeam

The Sign Right Translator is a tablet housing an original sign language translating application enhanced using machine learning and cloud computing. Via the camera input, the device aims to recognise, detect and capture the signs, which are then translated and displayed on the screen. Inspired by the student’s original drawings, the tablet has a variety of unique cases to further display their creativity.

The prototype team is formed of a mixture of graduates and apprentices from various disciplines, all based at Thales’ Crawley site. The team members are using their volunteering hours to help bring the student’s design to life, aiming to capture the student’s vision fully while assessing what creative adaptations they could implement as part of the project.

Beginning the prototype

During the planning stage, the team defined a simple but effective project management structure that let them follow Scrum, one of many agile methodologies, for product development. The Scrum Master and Product Owner worked together to outline the major milestones and create a plan on a page. This helped the team stay focused throughout the project and ensured key stakeholders were updated regularly on milestones and progress.

During the set-up phase they took a service design approach to better understand the users, the design presented to them and the feasibility of creating the product exactly as described. They created personas to help define the primary and secondary users, along with use cases and user stories to guide development of the product. During this stage they also defined the baseline requirements from the drawings they received, along with optional requirements, allowing them to introduce some creative aspects to both the hardware and software without compromising the designer’s original ideas.

Design Phase

Hardware Update

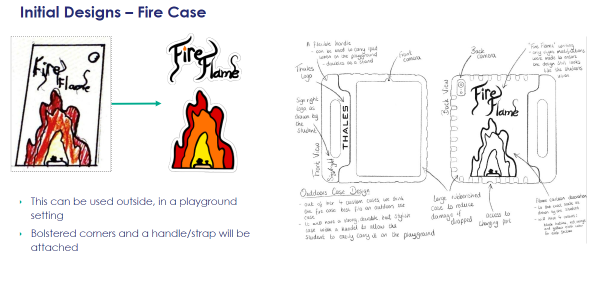

In hardware, they started by extracting the student’s designs from the initial specification and digitising them. They then investigated different ways to implement these in the custom cases. When exploring use cases, they looked into the different environments where the device would be used. They decided to design four different cases (see two of the designs below), one for each operational environment, and to add hardware that improves both functionality and ergonomics for each one. Following this, they worked on applying her artwork to the cases in a way that complemented the new hardware while staying true to the student’s vision. The team then moved into the prototyping phase, where they integrated the designs and hardware onto the cases and analysed different manufacturing processes for creating the final cases.

Software Update

In software, they started by researching Sign Language Translation (SLT) methods, focusing on which sign languages (BSL, ASL, etc.) have the most developed research and papers. They explored different AI models, comparing both CNN-based and 3D-Pose-based networks, and analysed their performance in terms of accuracy and speed. They investigated potential platforms for the client application, ultimately determining that an iPad mini with iPadOS would be the most appropriate choice. In designing the UI wireframes for the client application, they focused on requirements specific to child users, ensuring the interface is intuitive and engaging. They developed a local API endpoint that takes a video clip as input and responds with translated captions for each frame. This endpoint was tested locally for performance and accuracy. Following successful local testing, they deployed the API endpoint to the cloud with additional GPU compute to accelerate translation performance. Additionally, they developed the client application using the Flutter framework, incorporating an intuitive UI and playful animations to enhance the user experience.
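To give a feel for the request and response shape described above (a clip goes in, one caption per frame comes out), here is a minimal sketch in Python. It is not the team's code: the function names `translate_clip` and `handle_request`, the JSON layout and the `stub_model` placeholder are all assumptions made for illustration, and a real endpoint would decode video and call a trained network instead.

```python
import json
from typing import Callable, Dict, List, Sequence

# Hypothetical types: a "frame" is whatever a video decoder would yield
# (for example a pixel array). Any object works here because the model
# is passed in as a plain function.
Frame = object
SignModel = Callable[[Frame], str]


def translate_clip(frames: Sequence[Frame], model: SignModel) -> Dict[str, List[dict]]:
    """Return one caption entry per frame, in frame order."""
    captions = []
    for index, frame in enumerate(frames):
        captions.append({"frame": index, "caption": model(frame)})
    return {"captions": captions}


def handle_request(frames: Sequence[Frame], model: SignModel) -> str:
    """Serialise the per-frame captions as the JSON body of a response."""
    return json.dumps(translate_clip(frames, model))


def stub_model(frame: Frame) -> str:
    # Placeholder standing in for a trained network (assumption):
    # it simply upper-cases a text label used as a fake frame.
    return str(frame).upper()


if __name__ == "__main__":
    # Two fake "frames" stand in for a decoded video clip.
    print(handle_request(["hello", "thanks"], stub_model))
```

Passing the model in as a function keeps the sketch independent of any particular network, so the same response shape would apply whether the model runs locally or on cloud GPU compute.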

Prototype unveiled!

The prototype was unveiled at the South East awards on 20th June hosted at Canterbury Christ Church University. Huge well done to the Thales UK Crawley Prototeam.